Free Cooling in Summer Months: How to Keep Costs Down When Temperatures Rise

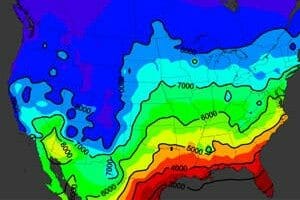

As we sneak up on summer, which has already arrived unofficially with a vengeance in parts of the West and Southwest, let us not lose sight of the opportunities to keep reaping the efficiency and economic benefits of free cooling our data centers, even as we slip into the dog days of summer. Some spaces will have mechanical plant design elements that preclude fully exploiting the summertime free cooling opportunities of a particular locale. Nevertheless, good airflow management practices and general control discipline will provide a path to extended free cooling benefits just about anywhere.

Airflow Management Discipline

Airflow management discipline is the key to extending access to free cooling into the summer months. Winter at many northern data center sites may appear to diminish the importance of strong airflow management discipline. After all, in Minneapolis during the winter, the biggest airflow management challenge may be recirculating enough waste return air to mix with the supply air and thereby keep supply temperatures above a designated minimum, which ranges from 41˚F up to 59˚F depending on server class. Nevertheless, practicing and improving airflow management discipline all year long has two important benefits.
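The winter mixing problem described above can be sketched with a simple blended-air temperature balance. This is a minimal sketch, assuming sensible-only mixing of equal-density air streams (humidity and fan heat ignored). The function name and the example temperatures are hypothetical; the 59˚F minimum is simply the top of the 41˚F to 59˚F range mentioned above.

```python
def recirculation_fraction(t_outdoor_f: float, t_return_f: float, t_min_supply_f: float) -> float:
    """Return the fraction of return air to blend with outdoor air so the mix
    reaches t_min_supply_f.

    Hypothetical helper assuming sensible-only, equal-density mixing:
        t_mix = f * t_return + (1 - f) * t_outdoor
    """
    if t_outdoor_f >= t_min_supply_f:
        return 0.0  # outdoor air alone already meets the minimum
    if t_return_f <= t_min_supply_f:
        raise ValueError("return air is too cool to lift the mix to the minimum")
    # Solve t_min = f * t_return + (1 - f) * t_outdoor for f
    return (t_min_supply_f - t_outdoor_f) / (t_return_f - t_outdoor_f)


# Hypothetical Minneapolis winter hour: 0 F outdoors, 95 F return air, 59 F minimum supply
fraction = recirculation_fraction(0.0, 95.0, 59.0)
print(f"Recirculate about {fraction:.0%} return air")
```

Because the fraction shrinks as the return temperature rises, a warmer, well-separated return stream needs less recirculated air to hold the minimum.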

First Benefit

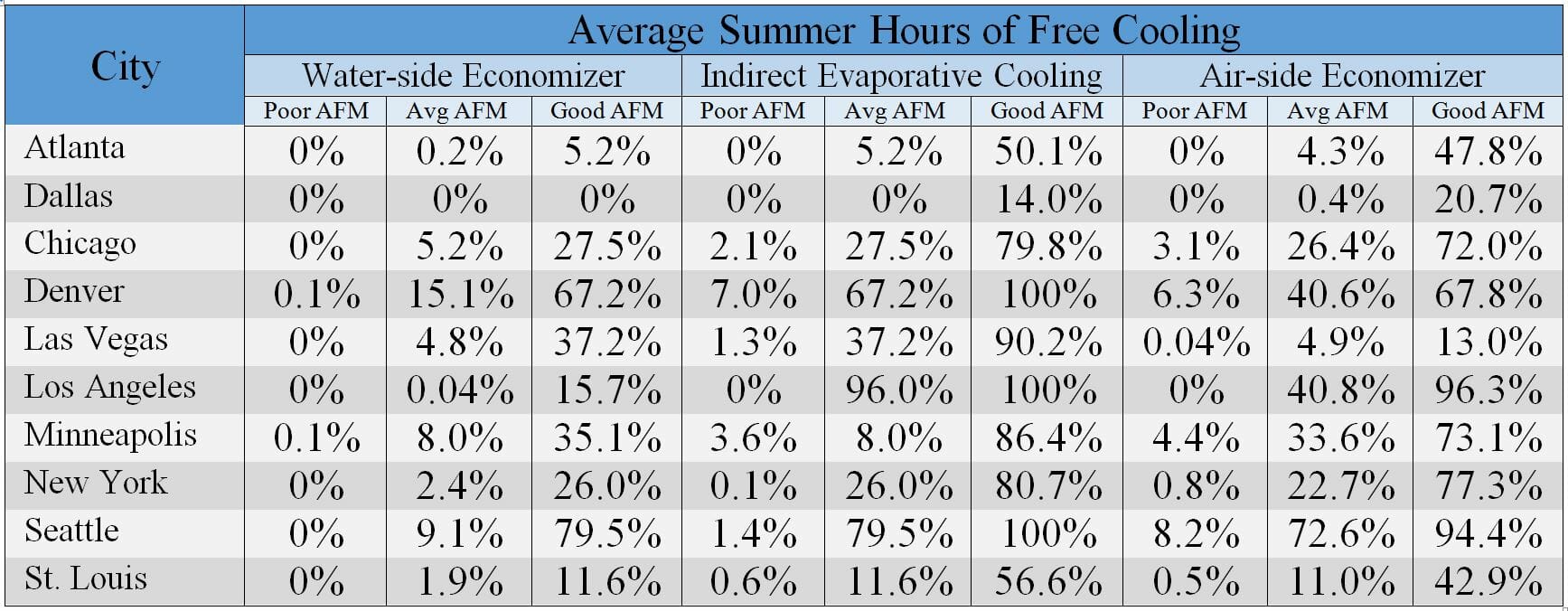

One of the benefits of all-year airflow management discipline hearkens back to the old adage for athletes and musicians: practice doesn’t make perfect; PERFECT practice makes perfect. In other words, good discipline begets good discipline. Therefore, to achieve the level of airflow management discipline needed to maximize summertime free cooling, those disciplines need to be in place all year long. These practices are not easy to turn on and off, and they should be in a continuous process of further refinement. And what is the measure of success in establishing and maintaining good airflow management discipline? The answer is straightforward: the weighted average ΔT across cooling coils (or in versus out of an economizer) should be as close as possible to the weighted average ΔT across IT equipment, and the volume of air supplied to the space should vary as little as possible from the volume of air consumed by the IT load. Perfection would be zero variance in both cases. Practically, a reasonably aggressive goal would be to keep the room ΔT within 2˚F of the load ΔT and the supply volume within 10% of the demand air volume. (For a more complete discussion of this topic, see my blog titled, “The 4 Delta T’s of Data Center Cooling: What You’re Missing.”) With these disciplines in place and demonstrated during the safer cool and cold months, a maximum supply temperature can be set 2˚F below the maximum desired server inlet temperature, thereby providing access to the maximum number of free cooling hours. The Average Summer Hours of Free Cooling table below illustrates how significantly airflow management discipline can affect access to free cooling.
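The two success metrics and the 2˚F supply margin above can be expressed as a simple check. This is a minimal sketch using the thresholds stated in the text (a 2˚F ΔT gap, a 10% volume gap and a 2˚F supply margin); the class and function names and the sample readings are hypothetical, and how you derive weighted average ΔTs and air volumes from your own monitoring is outside its scope.

```python
from dataclasses import dataclass


@dataclass
class AirflowReading:
    room_delta_t_f: float  # weighted average dT across cooling coils (or economizer in vs. out)
    it_delta_t_f: float    # weighted average dT across IT equipment
    supply_cfm: float      # air volume delivered to the space
    demand_cfm: float      # air volume consumed by the IT load


def meets_discipline_goals(r: AirflowReading,
                           max_dt_gap_f: float = 2.0,
                           max_volume_gap: float = 0.10) -> bool:
    """True if room dT is within max_dt_gap_f of IT dT and supply volume
    is within max_volume_gap (fractional) of demand volume."""
    if r.demand_cfm <= 0:
        raise ValueError("demand_cfm must be positive")
    dt_ok = abs(r.room_delta_t_f - r.it_delta_t_f) <= max_dt_gap_f
    volume_ok = abs(r.supply_cfm - r.demand_cfm) / r.demand_cfm <= max_volume_gap
    return dt_ok and volume_ok


def max_supply_setpoint_f(max_inlet_f: float, margin_f: float = 2.0) -> float:
    """Supply temperature ceiling set margin_f below the desired maximum inlet."""
    return max_inlet_f - margin_f


reading = AirflowReading(room_delta_t_f=19.0, it_delta_t_f=20.0,
                         supply_cfm=105_000, demand_cfm=100_000)
print(meets_discipline_goals(reading))  # 1 F dT gap and 5% volume gap: within both goals
print(max_supply_setpoint_f(80.6))      # example: 80.6 F, the ASHRAE recommended maximum inlet
```

The check is deliberately a pass/fail gate: only once both goals hold through the cool season does it make sense to trust the 2˚F margin in summer.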

Second Benefit

The other key benefit of year-round airflow management discipline relates to the ASHRAE X factor, which quantifies the server reliability impact of different operating temperatures. The X factor can be used to push beyond the recommended maximum temperature, accessing even more free cooling hours during the summer or, heaven forbid … eliminating the mechanical plant altogether. For any readers who may not yet have been introduced to the practical elegance of the X factor, allow me a few sentences of overview.

The X Factor

The IT OEMs represented on ASHRAE TC 9.9 gathered the cumulative intelligence from customer warranty experience, onboard environmental monitoring and volumes of anecdotal evidence to build a database of server reliability at different temperature conditions. Rather than over-share with the enemy, they normalized everything to a baseline failure rate at a baseline operating condition of 68˚F server inlet temperature, and then used their accumulated history to determine expected failure rates at temperatures above and below that 68˚F baseline. The X factor, then, is a relative failure factor: it is calculated by tallying all the hours in a year that servers ingest air below and above the baseline, and their experiential model then predicts the effect of exposure to that particular temperature profile compared with running at 68˚F all year. For example, they looked at data centers operating with no mechanical cooling, letting free cooling follow Mother Nature within a range from a 59˚F minimum up to a bin-data-predicted maximum. They calculated a case study for Chicago with an X factor of 0.99, meaning that allowing the data center to fluctuate between 59˚F and a maximum in the 90s would produce 1% fewer failures than operating at 68˚F all year. Conversely, they showed an X factor of 1.1 for Dallas, meaning a 10% increase in expected failures with no mechanical cooling versus operating all year at 68˚F. (A more detailed explanation can be found in the ASHRAE TC 9.9 white paper, “2011 Thermal Guidelines for Data Processing Environments: Expanded Data Center Classes and Usage Guidance,” or in Upsite’s white paper, “How IT Decisions Impact Data Center Facilities: The Importance of Mutual Understanding.”)
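Mechanically, a site’s X factor is an hours-weighted average of relative failure rates across temperature bins, normalized to 1.00 at 68˚F. The sketch below shows only that arithmetic. The relative failure rates in it are hypothetical placeholders chosen for illustration, not ASHRAE’s published values, and the bin hours are invented; substitute the figures from the ASHRAE TC 9.9 white paper and your own site’s bin data before drawing any conclusions.

```python
def annual_x_factor(hours_by_bin: dict[float, float],
                    relative_failure_rate: dict[float, float]) -> float:
    """Hours-weighted average relative failure rate (1.00 = 68 F all year)."""
    total_hours = sum(hours_by_bin.values())
    if total_hours <= 0:
        raise ValueError("need a positive number of hours")
    weighted = sum(hours * relative_failure_rate[bin_f]
                   for bin_f, hours in hours_by_bin.items())
    return weighted / total_hours


# HYPOTHETICAL relative failure rates by server inlet temperature bin (F);
# only the 68 F baseline of 1.00 comes from the text.
RELATIVE_FAILURE_RATE = {59.0: 0.75, 68.0: 1.00, 77.0: 1.25, 86.0: 1.45}

# HYPOTHETICAL annual hours spent in each bin (sums to 8,760)
site_hours = {59.0: 3000, 68.0: 4000, 77.0: 1200, 86.0: 560}

x = annual_x_factor(site_hours, RELATIVE_FAILURE_RATE)
print(f"X factor: {x:.2f}")
```

A result below 1.00 predicts fewer failures than a constant 68˚F, as in the Chicago case; a result above 1.00 predicts more, as in the Dallas case.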

Before applying the X factor to increasing summer free cooling hours, I would like to clarify a couple of points about this very valuable tool. While half of the cities studied by ASHRAE showed X factors predicting fewer server failures in data centers operating without any mechanical cooling than in data centers operating 24/7 at 68˚F, the other half all indicated an expectation of increased failures, ranging from 1% up to nearly 30% for Miami. While any number above 10% might at first blush seem like an unacceptable increase in failures, let’s apply a little context. First, if a particular data center in Dallas or Phoenix normally experiences three server failures in a year, then a 10% increase in Dallas would mean 3.3 failures per year and a 20% increase in Phoenix would mean 3.6. So in Dallas it might take a little over three years to see that extra failure and in Phoenix a little under two years, and given technology refresh cycles, that extra failure might never actually happen. Furthermore, the baseline assumes a 68˚F server inlet temperature 24/7, all year long. In reality, our sample data centers in Dallas or Miami are probably not delivering a constant, stable 68˚F server inlet temperature; rather, they have what we called our average experience in the first half of this discussion, where 68˚F supply air produced inlet temperatures ranging from 70˚ to 80˚F. Therefore, their actual failure experience is based not on the theoretical temperature baseline but on varying, higher temperatures, which means it already reflects an X factor well above 1.00.
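The Dallas and Phoenix arithmetic above can be checked in a few lines. This sketch assumes, as the text does, a baseline of three failures per year and treats the X factor as a simple multiplier on that baseline; the function names are hypothetical.

```python
import math


def extra_failures_per_year(baseline_failures: float, x_factor: float) -> float:
    """Additional expected failures per year relative to the 68 F baseline."""
    return baseline_failures * (x_factor - 1.0)


def years_to_one_extra_failure(baseline_failures: float, x_factor: float) -> float:
    """Years until the extra failures add up to one; infinite if X <= 1.00."""
    extra = extra_failures_per_year(baseline_failures, x_factor)
    return math.inf if extra <= 0 else 1.0 / extra


for city, x in [("Dallas", 1.1), ("Phoenix", 1.2)]:
    total = 3 * x
    years = years_to_one_extra_failure(3, x)
    print(f"{city}: {total:.1f} failures/yr, one extra failure every {years:.1f} years")
```

This prints 3.3 and 3.6 failures per year, with one extra failure roughly every 3.3 and 1.7 years respectively, consistent with the rough three-year and two-year figures above.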

That previous discussion was not a digression. Rather, that level of understanding of the X factor explains why cold-weather airflow management discipline is critical to expanding access to summertime free cooling. By tightly controlling the variance between the room ΔT and the IT load ΔT, you can increase your collateral of hours between 68˚F and whatever your SLA lower temperature limit is. The bigger that deposit, the more summer hours you can allow to creep into the space between the recommended maximum inlet temperature and however close to the allowable threshold you are comfortable encroaching, without negatively impacting your server reliability experience. In other words, the summer free cooling hours in the table in the first half of this discussion are all based on living within the ASHRAE recommended temperature envelope, and I suspect many readers were surprised by the extent of that free cooling. Now, by banking some cooler hours during the winter, you can use the X factor to creep above the recommended threshold without any deleterious effect on your data center’s primary, critical mission.
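The banking idea can be made concrete with a little algebra. If every other hour of the year sits at the 1.00 baseline, keeping the annual X factor at or below 1.00 requires the reliability credit from cool hours to cover the debit from hot hours: h_cool × (1 − x_cool) ≥ h_hot × (x_hot − 1). The sketch below solves that inequality for the maximum excursion hours. It is a simplification assuming a single cool bin and a single hot bin; the function name and the factors in the example are hypothetical, not ASHRAE values.

```python
def max_excursion_hours(cool_hours: float, x_cool: float, x_hot: float) -> float:
    """Hours allowed in a hot bin (x_hot > 1) before the annual X factor exceeds 1.00.

    Assumes every other hour of the year runs at the 1.00 baseline, so the
    credit cool_hours * (1 - x_cool) must cover the debit hot_hours * (x_hot - 1).
    """
    if not x_cool < 1.0 < x_hot:
        raise ValueError("expected x_cool < 1.00 < x_hot")
    return cool_hours * (1.0 - x_cool) / (x_hot - 1.0)


# HYPOTHETICAL: 2,000 banked winter hours at X = 0.80, summer excursions at X = 1.40
print(round(max_excursion_hours(2000, 0.80, 1.40)))
```

More banked hours, or banking them further below baseline (a lower x_cool), raises the allowable excursion hours. The result is only meaningful if the cool and excursion hours together fit within the 8,760 hours of a year and the excursions stay within the allowable envelope.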

Conclusion

In conclusion, I hope it is now clear that those hazy, lazy days of summer are definitely not incompatible with free cooling. In fact, with solid airflow management discipline coordinated with the appropriate type of economization, it is reasonable to expect data center free cooling for anywhere from 50% to 100% of summer hours in most U.S. locales. Furthermore, applying that discipline during the winter to bank some X factor collateral can further expand summer free cooling through occasional incursions into the allowable temperature range.

Ian Seaton

Data Center Consultant
