The Importance of Mutual Understanding Between IT and Facilities – Part 3: Specifying Equipment that Breathes from Front-to-Back

Specifying equipment that does not breathe front-to-back violates hot and cold aisle segregation, which forces lower set points and higher air volume delivery and, in turn, reduces total and redundant cooling capacity and raises operating costs.

Servers are not the only equipment IT management is tasked with specifying for the data center: the number of network switches may not approach the number of servers, but they are still critical to the effective functioning of the IT enterprise. The selection process for switches will typically consider how many ports are required, whether it will be a core switch or distribution switch with layer 3 or router functionality, whether it can be unmanaged or must be managed, whether speed and latency or raw volume is most important, and what might be required for power over Ethernet and redundancy. In addition, switches are typically sourced with some attention to scalability, plans for virtualization, ease and speed of application deployment and speed of application access. Investigating and sourcing switches should be closely tied to the overall business needs.

To the degree that efficiency and profitability also fall into the area of core business goals, the effect of the switches on the overall effective airflow management of a data center should also be part of that overall criteria checklist. As far as the mechanical health of the data center is concerned, all equipment should be rack-mountable and breathe from front to rear. This criterion is a recognized best practice from sources as diverse as the European Code of Conduct for Data Centers, BICSI-002, and ASHRAE TC9.9. If only it were so simple.

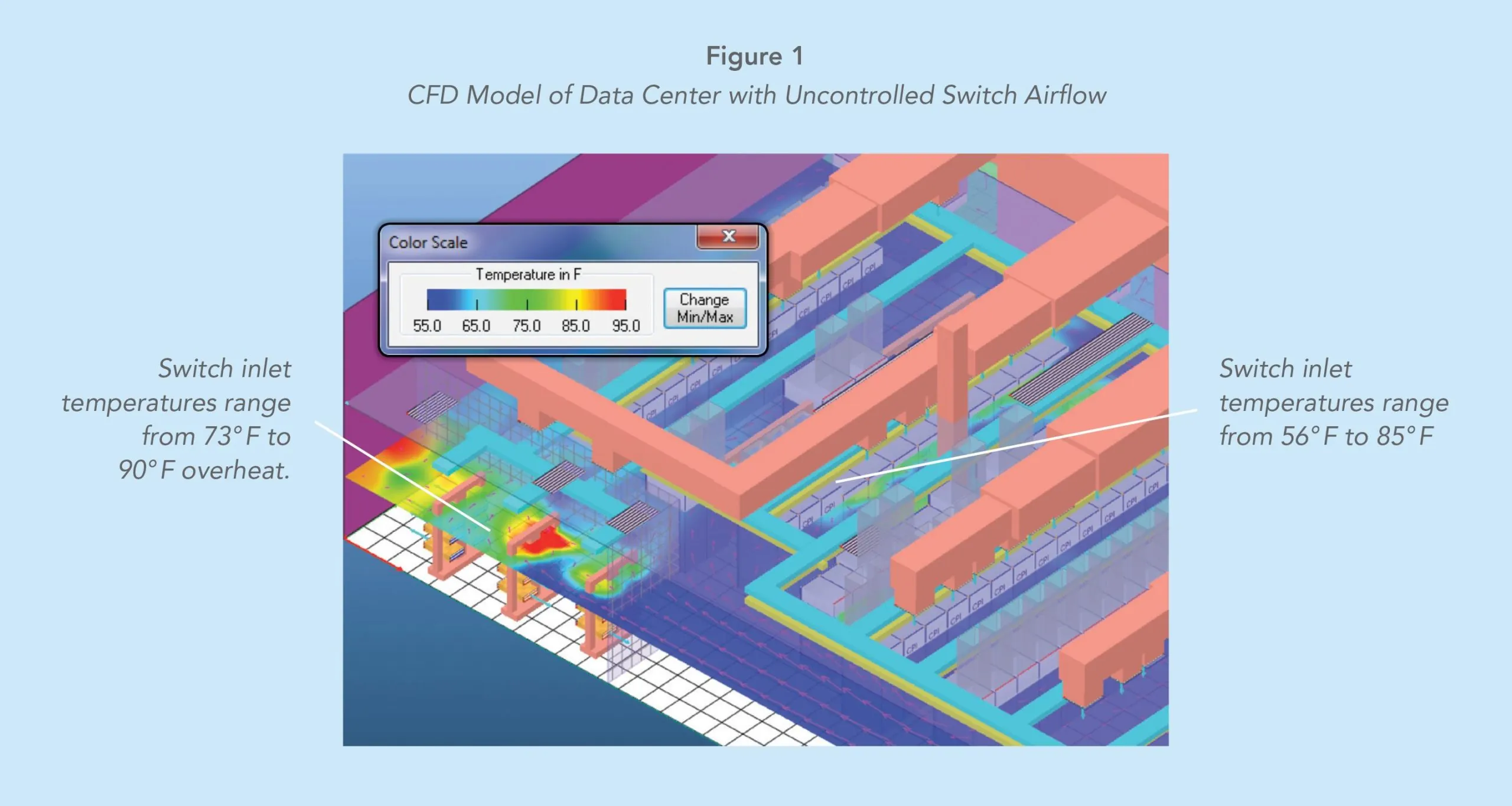

Nevertheless, even though switches may make up only a small fraction of the equipment in a data center compared to servers and storage devices, the effect of non-standard airflow can be staggering. A 3,500-square-foot, 514kW computer room illustrates the effect of uncontrolled, non-standard-breathing network hardware. Figure 1 below shows the effect of this uncontrolled airflow. There are six racks in the point of presence entrance room with only 3kW per rack, but the equipment in one rack is ingesting air at inlet temperatures ranging from 73° F to 90° F, and one 6kW core switch rack is ingesting air ranging from 56° F to 85° F. While those temperatures were actually allowed by the manufacturers’ specifications, they exceeded the owner’s internal SLAs and, more importantly, undermined the otherwise efficient design of this space. All the server cabinets were equipped with exhaust chimneys coupled to a suspended ceiling return space, and the supply air was ducted directly into all the cold aisles. In addition, ceiling grates were located around the uncontained cabinets and racks to capture the heated return air. Even so, to minimize the number and severity of hot spots, the cooling system was configured with a 72° F set point, resulting in 54° F air typically delivered through the overhead ducts. In addition, the cooling system needed to deliver 82,500 CFM to meet the 67,900 CFM demand of the IT equipment.

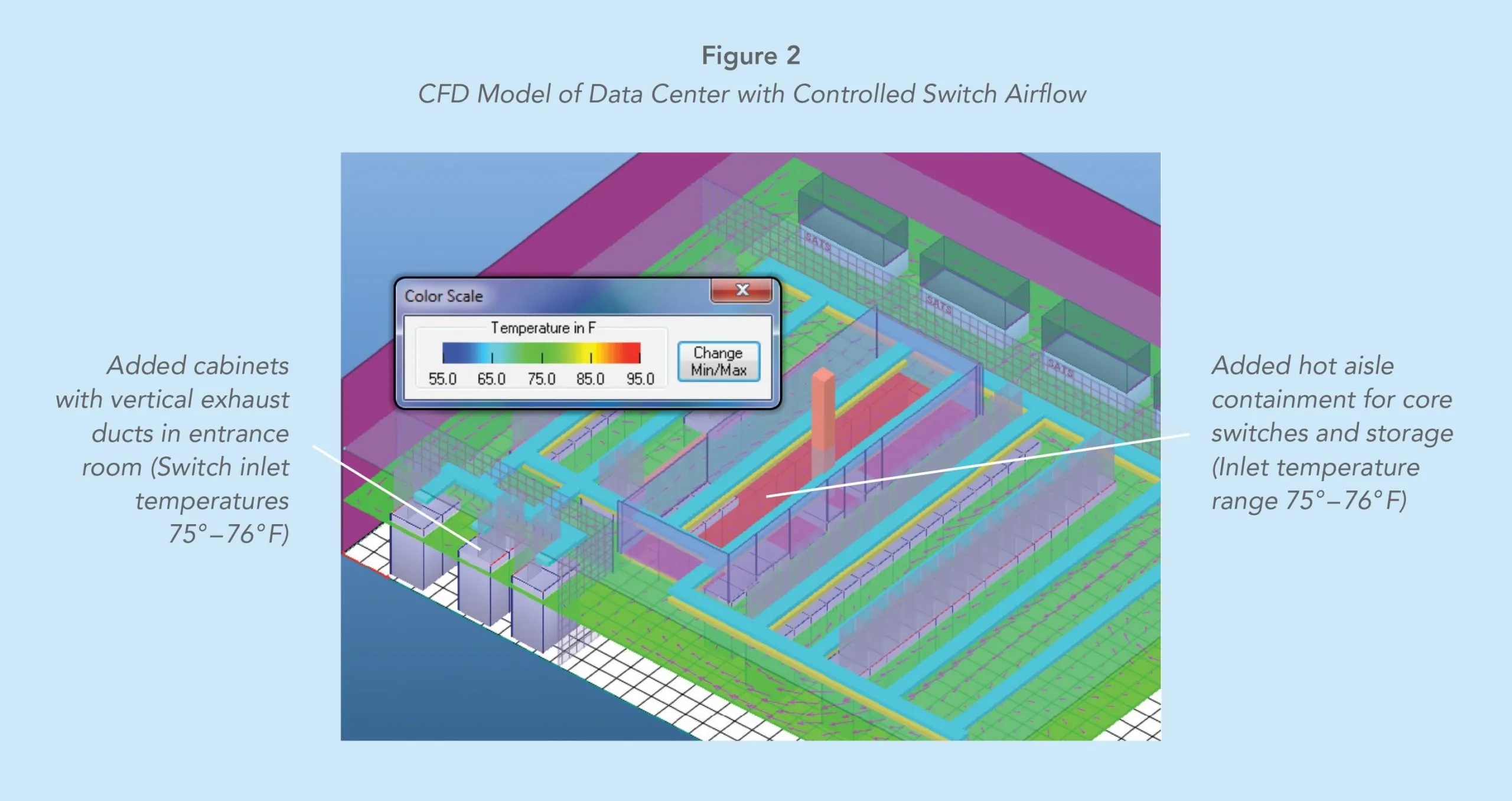
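The baseline airflow numbers above imply a sizable surplus of supply air over IT demand. As a minimal sketch of that arithmetic, using only the CFM figures quoted in the text (the function name `oversupply_ratio` is illustrative and does not come from any monitoring tool):

```python
def oversupply_ratio(supply_cfm: float, demand_cfm: float) -> float:
    """Return supplied airflow as a multiple of IT equipment airflow demand."""
    if demand_cfm <= 0:
        raise ValueError("demand_cfm must be positive")
    return supply_cfm / demand_cfm


# Figures quoted in the article for the uncontained baseline room.
IT_DEMAND_CFM = 67_900
BASELINE_SUPPLY_CFM = 82_500

baseline_ratio = oversupply_ratio(BASELINE_SUPPLY_CFM, IT_DEMAND_CFM)
surplus_pct = (baseline_ratio - 1) * 100
print(f"Baseline supply is {baseline_ratio:.2f}x demand ({surplus_pct:.1f}% surplus)")
```

With the quoted figures, the cooling system is moving roughly 21.5% more air than the IT equipment actually draws, which is the overhead that poor airflow segregation imposes in this example.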

Contrast those results with a model of the same data center with all the return air effectively contained. Side-breathing switches were redeployed in cabinets that redirected the airflow into a front-to-rear topology, and those cabinets were then captured in a hot aisle containment structure. In the entrance room, the side-to-front and rear-to-front switches were removed from open two-post racks and redeployed in cabinets equipped with rack-mounted boxes, which redirected the airflow into a standard front-to-rear topology, and then those cabinets were equipped with vertical exhaust ducts.

In addition, because these changes eliminated all the hot spots throughout the room, the overhead duct delivery system was no longer needed, removing that cost from the total capital plan. Figure 2 illustrates the results of these changes. Not only are temperatures more consistent and hot spots eliminated, but the supply temperature was also raised from 54° F to 75° F, resulting in energy savings of 38% for the chiller plant. Furthermore, the airflow required to maintain all equipment inlet temperatures between 75° F and 76° F dropped from 82,500 CFM to 72,000 CFM, resulting in a 33.5% CRAH fan energy savings, based on the non-linear fan law relationships discussed earlier in this series.
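The fan savings figure can be checked against the fan affinity law, under which fan power scales approximately with the cube of airflow. The following is a rough sketch that assumes ideal cube-law behavior (real CRAH fans, motors, and drives will deviate somewhat); the function name `fan_power_savings` is illustrative:

```python
def fan_power_savings(old_cfm: float, new_cfm: float, exponent: float = 3.0) -> float:
    """Fractional fan power savings when airflow drops from old_cfm to new_cfm.

    Assumes the ideal fan affinity law: power is proportional to flow ** exponent,
    with exponent = 3 for the textbook cube law.
    """
    if old_cfm <= 0 or new_cfm < 0:
        raise ValueError("old_cfm must be positive and new_cfm non-negative")
    return 1.0 - (new_cfm / old_cfm) ** exponent


# Airflow figures quoted in the article, before and after containment.
savings = fan_power_savings(old_cfm=82_500, new_cfm=72_000)
print(f"Estimated CRAH fan energy savings: {savings:.1%}")
```

A 12.7% reduction in airflow yields about a 33.5% reduction in fan power under this assumption, which matches the figure quoted above and shows why modest airflow reductions pay off disproportionately.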

All relevant best practices and standards dictate that proper airflow management can only be achieved with equipment that breathes from front to rear. Unfortunately, other application criteria often result in the selection of non-conforming equipment. Fortunately, there are ways to make any equipment behave as though it breathes from front to rear. For large switches that breathe side-to-side, wider cabinets are now available with internal features that redirect that airflow into conformance with the rest of the data center. For smaller-profile switches that may have front-to-side, side-to-front, side-to-rear, or even rear-to-front airflow, rack-mount boxes are available that will redirect any of these airflow patterns into conformance with the rest of the data center. These boxes typically consume one or two extra rack mount units, but they maintain the integrity of an otherwise well-designed and well-executed space. Some storage equipment will present similar challenges to the data center manager; however, some form of correction is available and should be used either to maximize the efficiency of the data center mechanical plant or to increase the effective capacity of that mechanical plant.



Ian Seaton

Data Center Consultant
