How A-4 Servers Could Impact the Future of Data Center Airflow Management

What is an A-4 class server, and how might such servers affect the future of data center airflow management?

In 2011, ASHRAE TC9.9 updated its environmental guidelines for data processing equipment and added four new server classes, distinguished by their allowable inlet temperature ranges. Class A-4 servers have an allowable range of 41°F to 113°F. The other major contribution of this update was the “X” factor, the basis of a methodology for determining an acceptable number of hours per year to operate at these wider temperature ranges. In a nutshell, the “X” factor is the reliability level (failure rate) to be expected when operating the data center at a constant 68°F all year. The committee then determined failure rates for operation at cooler and at hotter temperatures and provided a methodology for letting the data center temperature fluctuate, following Mother Nature, and for calculating the estimated impact on reliability relative to the constant 68°F X factor baseline.
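
As a rough illustration of that methodology, the sketch below computes a time-weighted average of relative failure rates across a year of temperature bins. The bin names, hours, and relative failure rates are made-up placeholders, not values from the ASHRAE tables, and the function name `net_x_factor` is hypothetical; the only thing taken from the text above is the idea of a 68°F baseline, here normalized to 1.0.

```python
def net_x_factor(hours_by_bin, x_factor_by_bin):
    """Time-weighted average of relative failure rates over all bins.

    hours_by_bin: hours per year spent in each inlet temperature bin.
    x_factor_by_bin: failure rate in each bin relative to the constant
        68 F baseline (baseline = 1.0).
    """
    total_hours = sum(hours_by_bin.values())
    weighted_sum = sum(
        hours * x_factor_by_bin[bin_name]
        for bin_name, hours in hours_by_bin.items()
    )
    return weighted_sum / total_hours


# Hypothetical climate profile and relative failure rates (illustrative only).
hours_by_bin = {"below_68F": 5000, "at_68F": 2000, "above_68F": 1760}
x_factor_by_bin = {"below_68F": 0.9, "at_68F": 1.0, "above_68F": 1.3}

net = net_x_factor(hours_by_bin, x_factor_by_bin)
print(f"Net relative failure rate: {net:.4f}")
```

A result above 1.0 predicts more failures than the constant 68°F baseline and a result below 1.0 predicts fewer, which is why cooler climates can come out ahead of the baseline.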

Depending on the data center location, predicted reliability could be either better or worse than the X factor baseline and, even if worse, perhaps still within an acceptable pain threshold. For example, if the failure rate rose 3% in a data center of 1000 servers with a normal failure rate of 2%, the 3% increase would predict an increase from 20 failures to 20.6 failures, which may be a fair trade-off for avoiding the capital cost of building a chiller plant and the operating cost of any mechanical refrigerant cooling. In theory, then, we would expect A-4 servers to stretch that envelope, so that a 3% failure rate increase for A-2 servers might be only 0.5% for A-4 servers in the same climate conditions. Unfortunately, the reality does not appear to be that simple.
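
The arithmetic in that example can be checked with a few lines of Python. This is only a sketch of the calculation described above; the function name `expected_failures` is an illustrative choice, and the inputs are the figures from the example (1000 servers, a 2% normal failure rate, a 3% relative increase).

```python
def expected_failures(server_count, baseline_failure_rate, relative_change):
    """Expected failures after a relative change in the failure rate.

    relative_change is a fraction of the baseline rate: 0.03 means the
    failure rate is 3% higher than normal, not 3 percentage points higher.
    """
    baseline_failures = server_count * baseline_failure_rate
    return baseline_failures * (1.0 + relative_change)


baseline = expected_failures(1000, 0.02, 0.0)
with_increase = expected_failures(1000, 0.02, 0.03)
print(f"{baseline:.1f} failures at baseline, {with_increase:.1f} with a 3% increase")
```

The distinction in the comment matters: a 3% relative increase on a 2% failure rate adds 0.6 failures per 1000 servers, whereas 3 percentage points would add 30.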

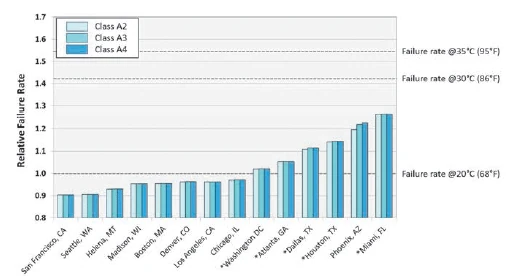

Chart 1: Failure rate projections for air-side economizer for selected U.S. cities.

Chart 1, from Appendix I, “Equipment Reliability Data for Selected Major U.S. and Global Cities,” in ASHRAE’s Thermal Guidelines for Data Processing Environments, third edition, indicates negligible or non-existent failure rate differences among Class A2, Class A3 and Class A4 servers under the same meteorological conditions. Therefore, for the time being, the extra dollars paid for a Class A-4 server buy better warranty coverage, but not necessarily better performance. Remember, the ASHRAE TC9.9 IT sub-committee consists of representatives from most of the major IT OEMs, and its published guidelines reflect a threshold on which everyone could agree. Some equipment vendors are producing, or are in the process of developing, true Class A-4 servers based on actual performance capability. As this equipment becomes available and as the price premium over standard A-2 (50°F-95°F) and A-3 (41°F-104°F) servers diminishes, we are looking at the potential for significant changes in the data center industry.

The most obvious and profound change that functional and reasonably priced Class A-4 servers could facilitate would be the elimination of what we currently understand as the data center mechanical plant. Based on the data from Chart 1, data centers could already be built and operated in San Francisco, Los Angeles, Seattle, Denver, Boston and Chicago with Class A-2 servers without any mechanical refrigerant cooling. A true A-4 server would add most of the other cities to the list, and extremely hot and dry locations like Phoenix could then combine Class A-4 servers with evaporative or indirect evaporative cooling to eliminate the chiller plant or any refrigerant cooling. Note that exploiting the benefits of Class A-4 servers does not necessarily require exposing the data center to the concerns associated with outside free air cooling. There are plenty of indirect heat exchange technologies, such as water-side economization, indirect evaporative cooling and re-purposed energy recovery wheel air-to-air heat exchangers, that can effectively eliminate the need for chillers and refrigerant cooling with A-4 servers.

One might think that a much more relaxed approach to airflow management could be another effect of deploying Class A-4 servers, but these occasionally hot data centers still might not be terribly forgiving of lackadaisical airflow management practices. For example, as hot as the supply air might get, it is still removing heat from the electronic equipment. While the ΔT, or temperature rise through the servers, may not be as high as normal when operating at these elevated temperatures, mixing 110°F supply air with uncontrolled, re-circulated 120-130°F exhaust air is still going to cause hot spots: real hot spots. In addition, bypass airflow is still bypass airflow and is still responsible for wasted fan energy. Finally, there might be a whole new area of airflow management devoted to keeping the supply air out of the cold aisle. While that may sound a little counter-intuitive, supply air that occasionally exceeds 100°F might not make for the most pleasant working conditions for IT staff, who probably didn’t spend all that time in college to work in conditions similar to those of a central Texas roofer in July. Therefore, in hotter climates, fully exploiting the advantages of Class A-4 servers could very well make the next frontier for airflow management the creation of structures and accessories that keep the supply air and the return air separated not only from each other but also from the human-occupied space of the data center.
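
To put a rough number on the hot spot concern, the sketch below estimates server inlet temperature when some fraction of the inlet air is re-circulated exhaust. It assumes ideal mixing of two air streams with equal density and specific heat, which is a simplification. The 25% recirculation fraction is a hypothetical value, 125°F is simply the midpoint of the 120-130°F exhaust range mentioned above, and the function name is illustrative.

```python
A4_MAX_ALLOWABLE_F = 113.0  # Upper allowable inlet limit for Class A-4, per the article.


def mixed_inlet_temp_f(supply_f, exhaust_f, recirculation_fraction):
    """Inlet temperature from ideal mixing of supply air and re-circulated exhaust.

    recirculation_fraction is the share of inlet air that is re-circulated
    exhaust, between 0.0 (none) and 1.0 (all).
    """
    if not 0.0 <= recirculation_fraction <= 1.0:
        raise ValueError("recirculation_fraction must be between 0 and 1")
    supply_share = 1.0 - recirculation_fraction
    return supply_share * supply_f + recirculation_fraction * exhaust_f


# Hypothetical scenario: 110 F supply, 125 F exhaust, 25% recirculation.
inlet_f = mixed_inlet_temp_f(110.0, 125.0, 0.25)
print(f"Inlet: {inlet_f:.2f} F, exceeds A-4 limit: {inlet_f > A4_MAX_ALLOWABLE_F}")
```

Under these assumptions, even a modest amount of recirculation pushes the inlet temperature past the A-4 allowable limit when the supply air is already at 110°F, which is the sense in which hot data centers remain unforgiving.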

Ian Seaton

Data Center Consultant

1 Comment

Thank you Ian. It will be fun to watch air flow management practices and products evolve to keep our IT friends “cool” while the ITE plays in the 3 digit temp range.