What You Need to Know About Managing the Return Plenum

Back at the birth of data center thermal management, when it was a topic of discussion for only a very small circle of friends, I tried to carve out my position of expertise in a field most folks in the industry didn’t even know was a field yet. I did it with a three-pronged message: 1) Get rid of hot aisle perforated floor tiles (purloined unapologetically from Dr. Bob himself), 2) Top-mounted cabinet fans deliver more harm than good, and 3) Forget about your return air (after isolating it, of course).

So why would I say to forget about your return air and then twenty years later write a blog about managing the return plenum? Perhaps it is just keeping with the season to disavow our sordid past and stake out new expedient territory. Or perhaps reality has changed – what was true then is no longer true now. Or perhaps, both are true and this is the Seaton Paradox of Hot Air Paraconsistent Logic.

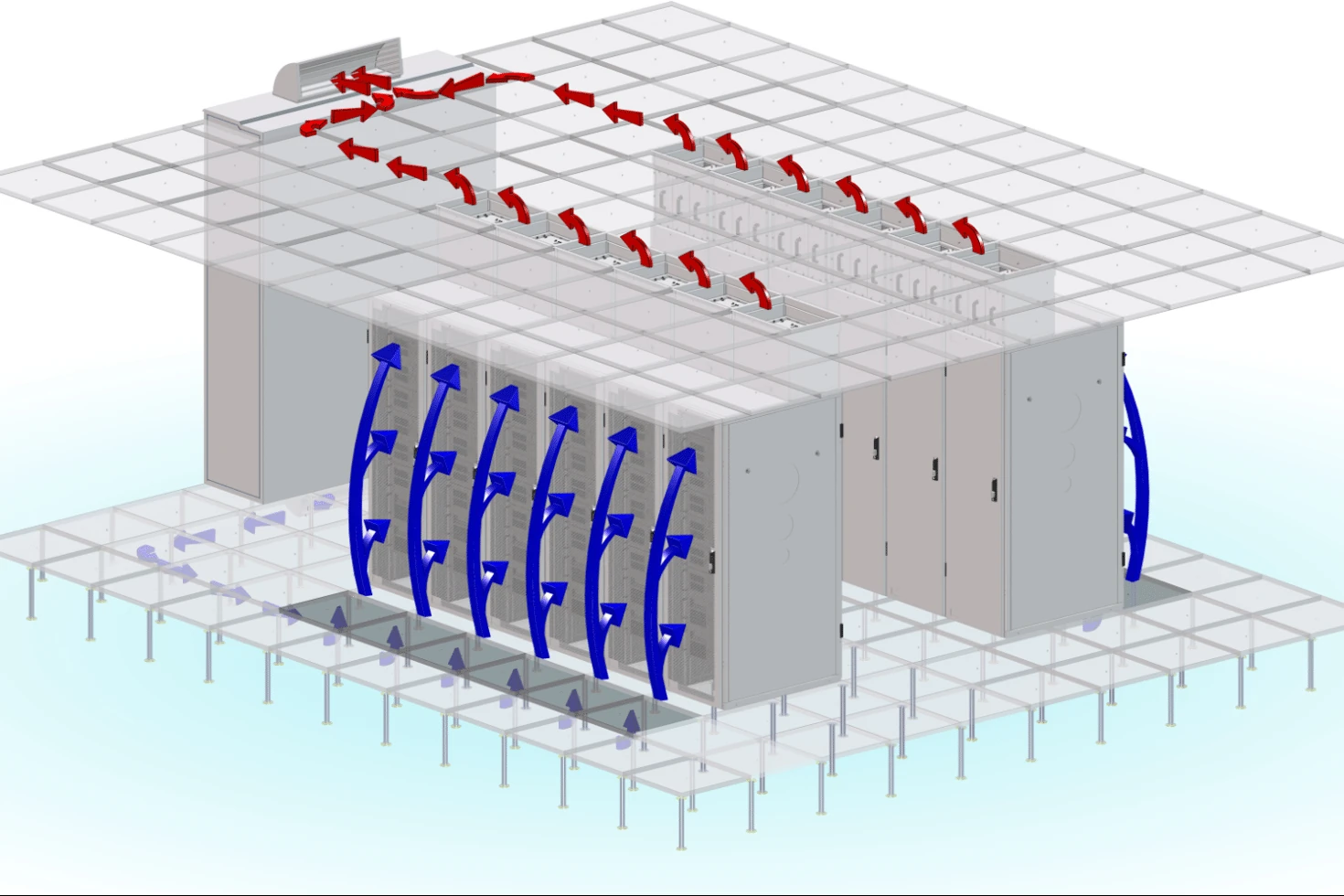

In the beginning, it was critically important to stress the necessity of ignoring the return air, at least after it was isolated from the rest of the data center or, back then, the computer room. In those days there was no dichotomy of comfort cooling versus data center cooling – there was just cooling. As a result, as data center cooling became an art form and a science, we needed to identify some distinctions. An easy starting point was the model of comfort cooling, with a thermostat that controlled the room temperature by responding to and controlling the return air temperature. The point of departure was that, with good airflow management practices, we could isolate that return air from the data center so it had no impact on anything that was going on with our data communications hardware. It’s out of the room; forget about it. That point of departure then opened the door to talk about supply set-points, server inlet control points, and the benefits of exploiting the periodically relaxed maximum operating temperature thresholds of our equipment. In retrospect, I suppose some of us were, in fact, a bit amusing in our passionate diatribes: Why are you even thinking about your return air? Just get it out of the room and move on!

Well, let’s temper that just a little bit – just get the hot air out of the room and keep it out of the room, which brings us to a pretty basic element of return plenum management that I would hope goes without saying. Best practices tell us to separate supply air and return air in the data center as completely as possible, and those practices extend into the plenum as well. If a return plenum is just a re-purposed space above a suspended ceiling, let’s make sure that ceiling barrier is maintaining the integrity of our supply and return air separation. There are no mysteries here: no missing ceiling tiles, no vents anyplace other than in hot aisles, and some form of plenum extension attached to our CRACs or CRAHs if, in fact, the space utilizes traditional precision perimeter cooling. The discipline we have applied to sealing unused rack units, cable access ports, and non-standard flow paths should be applied to the return plenum as well.

The size of the return plenum is actually a subset of the discipline of maintaining integrity. The hard and fast rule here is to do one of three things: use the Darcy-Weisbach equation to understand the pressure head of a return plenum, run a computational fluid dynamics (CFD) model study to validate that space volume and pressure differentials are adequate to support the necessary flow, or utilize a commercially available duct-loss modeling tool. In addition to these scientific approaches, there is a rule of thumb: if we’re on a raised floor and our return plenum volume is larger than our supply plenum volume, we are probably going to be OK. If we’re not on a raised floor and we have any questions about the efficacy of our plenum volume (if you have a ten-foot ceiling in a thirty-foot tall building, you don’t have any questions), then we’re back to relying on Darcy-Weisbach, CFD, or duct-loss modeling. A related issue is whether there are any impediments to flow in that space. Common impediments are concrete or steel girders, beams, and strengthening flanges. I have also encountered supply ductwork as an impediment to flow in spaces without raised floors. Some years ago I did a CFD model for one client who had something like a 12” clearance under a large supply duct over a space deployed with chimney cabinets. The space was designed for 12kW cabinets, but a large part of two rows could only support 5kW per cabinet because of the impediment to flow.
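For a feel of why a pinched clearance hurts so much, here is a minimal Python sketch of the Darcy-Weisbach relationship applied to a rectangular flow path. Everything numeric in it is an assumption for illustration: the air density, the friction factor of 0.02, the function names, and the dimensions are mine, not measurements from any real site. A real plenum is not a smooth duct, so treat this as a first-pass estimate and not a substitute for CFD or a duct-loss tool.

```python
# Rough Darcy-Weisbach estimate for a rectangular return path.
# All default values below are illustrative assumptions.

AIR_DENSITY_KG_M3 = 1.2   # assumed density of warm return air
PA_PER_IN_H2O = 249.089   # approximate conversion, pascals per inch of water


def hydraulic_diameter(width_m: float, height_m: float) -> float:
    """Hydraulic diameter of a rectangular cross-section: 4*A/P = 2ab/(a+b)."""
    return 2.0 * width_m * height_m / (width_m + height_m)


def darcy_weisbach_drop_pa(flow_m3s: float, width_m: float, height_m: float,
                           length_m: float, friction_factor: float = 0.02,
                           density: float = AIR_DENSITY_KG_M3) -> float:
    """Friction pressure drop in pascals: f * (L / D_h) * rho * v^2 / 2."""
    velocity = flow_m3s / (width_m * height_m)  # mean velocity, m/s
    d_h = hydraulic_diameter(width_m, height_m)
    return friction_factor * (length_m / d_h) * density * velocity ** 2 / 2.0


def pa_to_in_h2o(pressure_pa: float) -> float:
    """Convert pascals to inches of water column."""
    return pressure_pa / PA_PER_IN_H2O


if __name__ == "__main__":
    # Same airflow and run length, open plenum versus a pinched clearance.
    open_path = darcy_weisbach_drop_pa(2.0, 1.0, 1.0, 10.0)
    pinched = darcy_weisbach_drop_pa(2.0, 1.0, 0.3, 10.0)
    print(f"open: {open_path:.2f} Pa, pinched: {pinched:.2f} Pa")
```

Because the drop scales with the square of velocity and inversely with hydraulic diameter, shrinking the clearance raises the loss on both counts, which is consistent with the kind of capacity shortfall described above.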

The whole issue of the impediment to flow is driven by the need to maintain pressure differentials that assure the supply side of the data center is adequately pressurized to maintain necessary supply volumes to all equipment and that return pressures are low enough to facilitate the effective removal of warm waste air. With maximum separation between cool and warm air masses, and appropriate plenum sizing, when we are using traditional perimeter cooling equipment, the same fans are driving supply and pulling return. We can, therefore, control pressure differentials by controlling fan speed based on those space pressure differentials. This is a relatively straightforward management function and only gets complicated in larger spaces where there may be large pressure variations within the cold space. A further complication occurs with free cooling scenarios wherein supply air is delivered and the waste air is removed by separate fan systems. In these situations, it is important to have a pressure differential control system for the waste fans that maintains some minimum negative pressure differential against the supply side of the room – a large enough differential to assure we are not providing a cause for hot air re-circulation but low enough that we are not sucking bypass air through our ICT equipment. In practice, these pressure differentials can run around 0.01” H2O, but there is plenty of empirical research indicating they can be 0.005” H2O or even lower with effective air separation.
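The control idea for separately driven waste fans can be sketched in a few lines of Python. This is a hypothetical proportional step with a deadband, not how any particular building management system works: the function name, the gain, the deadband, and the speed limits are all assumptions I picked for illustration. The only number taken from the discussion above is the 0.01” H2O target. The measured differential is defined here as supply-side pressure minus return plenum pressure, so it is positive when the return side is lower.

```python
def next_fan_speed(current_speed_pct: float,
                   measured_dp_in_h2o: float,
                   target_dp_in_h2o: float = 0.01,      # target from the text
                   deadband_in_h2o: float = 0.002,      # assumed tolerance
                   gain_pct_per_in_h2o: float = 500.0,  # assumed tuning value
                   min_speed_pct: float = 20.0,         # assumed fan limits
                   max_speed_pct: float = 100.0) -> float:
    """Return the next waste-fan speed (percent) for one control step.

    Too small a differential risks hot air re-circulation, so the fans
    speed up. Too large a differential risks pulling bypass air through
    the ICT equipment, so the fans slow down.
    """
    error = target_dp_in_h2o - measured_dp_in_h2o
    if abs(error) <= deadband_in_h2o:
        return current_speed_pct  # close enough, leave the fans alone
    proposed = current_speed_pct + gain_pct_per_in_h2o * error
    return max(min_speed_pct, min(max_speed_pct, proposed))


if __name__ == "__main__":
    for reading in (0.004, 0.010, 0.020):
        print(reading, next_fan_speed(60.0, reading))
```

The deadband is there to keep the fans from hunting around the set-point. With differentials this small, sensor noise alone could otherwise drive constant speed changes.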

There are actually some occasions when it even behooves us to monitor the temperature in our return plenum. If we are deploying some form of air-to-air heat exchanger, indirect evaporative cooling, or another form of economization that provides some partial free cooling benefit when the ambient condition is lower than the return temperature, then we want to monitor those temperatures closely enough to be sure we are shedding that load from our backup mechanical systems and reaping those cost savings. Accordingly, the higher the return temperature, the higher the number of partial free cooling hours, making all that hole-plugging discipline worthwhile. In addition, if we are looking to give ourselves an internal report card on the effectiveness of our airflow management initiatives, we can compare the temperature differential across our ICT equipment to the temperature differential across our cooling source: the closer the two differentials are to each other, the better the grade we can give ourselves.
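Both of those monitoring ideas reduce to simple arithmetic, sketched below in Python. The function names, the 0-to-1 score, and the sample temperatures are hypothetical. The optional `approach_c` parameter is my own addition to allow for heat exchanger approach temperature, and it defaults to zero so the comparison matches the plain "ambient lower than return" condition described above.

```python
from typing import Iterable


def delta_t_match(it_inlet_c: float, it_outlet_c: float,
                  cooling_supply_c: float, cooling_return_c: float) -> float:
    """Score from 0 to 1 for how closely the cooling delta-T matches the
    ICT equipment delta-T. A score of 1.0 means the two are identical."""
    it_dt = it_outlet_c - it_inlet_c
    cooling_dt = cooling_return_c - cooling_supply_c
    if it_dt <= 0 or cooling_dt <= 0:
        raise ValueError("both temperature differentials must be positive")
    return min(it_dt, cooling_dt) / max(it_dt, cooling_dt)


def partial_free_cooling_hours(hourly_ambient_c: Iterable[float],
                               return_temp_c: float,
                               approach_c: float = 0.0) -> int:
    """Count hours in which ambient (plus an assumed approach) is below
    the return temperature, so the economizer can shed some load."""
    return sum(1 for t in hourly_ambient_c if t + approach_c < return_temp_c)


if __name__ == "__main__":
    # Made-up readings: 12 degree rise across servers, 9 across the cooling unit.
    print(delta_t_match(24.0, 36.0, 18.0, 27.0))

    # Made-up ambient hours, evaluated at two different return temperatures.
    ambient = [20.0, 28.0, 32.0, 36.0]
    print(partial_free_cooling_hours(ambient, 30.0),
          partial_free_cooling_hours(ambient, 35.0))
```

Run against the same ambient data, the higher return temperature qualifies more hours, which is the payoff for keeping bypass air out of the return path.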

So, according to the Seaton Paradox of Hot Air Paraconsistent Logic, you should totally disregard your return air in the design of your space and monitor pressure and temperature differentials within your return plenum to assess and maintain the health of your data center.

Ian Seaton

Data Center Consultant
