The Importance of Mutual Understanding Between IT and Facilities – Part 5: Specifying Cooling Unit Set Points

Best practice is to specify the maximum allowable IT equipment inlet temperature and let the mechanical plant find its own level.

Managing temperature by thermostat set point frequently results in wasted mechanical plant energy and in cycling, or even heating, by cooling equipment.

While determining a data center set point may sound like a facilities decision, it is often dictated by IT management, which leads to the usual round of “meat locker” jokes at data center conferences around the country. Despite the plethora of conference papers and industry e-newsletters on the subject, 72° F set points are still common in a large proportion of data centers today, and set points in the range of 68° F are not unusual. These decisions can be driven by inherent IT conservatism, by precedent (“that’s the way we’ve always done it”), or by a response to real or imagined hot spot problems.

This last motivation, hot spot mitigation, can be problematic. Remember that most data center cooling units will drop the temperature of air meeting or exceeding the set point by approximately 18° F, so a 68° F set point could conceivably produce 50° F supply air, which may be cool enough to cause dew point problems. At 50° F, saturation (100% RH, the point at which condensation begins) is reached at 55 grains of moisture per pound of dry air, a condition that would be met at any of the following data center control settings:

- 60% RH @ 65° F
- 50% RH @ 70° F
- 45% RH @ 75° F
- 36% RH @ 80° F
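The dew point arithmetic above can be checked with a short Python sketch. It assumes the Magnus approximation for saturation vapor pressure (coefficients 17.62 and 243.12 °C) and sea-level pressure rather than full ASHRAE psychrometric tables, so its results are approximate: it puts saturation at 50° F in the low-to-mid 50s of grains per pound, close to the figure above, and places the dew point of each listed control setting at or slightly above 50° F. The function names are illustrative only.

```python
import math

# Magnus-form constants for saturation vapor pressure over liquid water.
# This is an approximation, not the full ASHRAE psychrometric formulation.
A = 17.62
B = 243.12            # deg C
P_ATM_HPA = 1013.25   # assumes sea-level standard pressure
GRAINS_PER_LB = 7000.0


def f_to_c(t_f: float) -> float:
    return (t_f - 32.0) * 5.0 / 9.0


def c_to_f(t_c: float) -> float:
    return t_c * 9.0 / 5.0 + 32.0


def sat_vapor_pressure_hpa(t_c: float) -> float:
    """Saturation vapor pressure (hPa) over water at t_c deg C."""
    return 6.112 * math.exp(A * t_c / (B + t_c))


def saturation_grains(t_f: float) -> float:
    """Grains of moisture per lb of dry air at 100% RH and temperature t_f."""
    pv = sat_vapor_pressure_hpa(f_to_c(t_f))
    w = 0.621945 * pv / (P_ATM_HPA - pv)  # humidity ratio, lb water / lb dry air
    return w * GRAINS_PER_LB


def dew_point_f(t_f: float, rh_pct: float) -> float:
    """Dew point (deg F) from dry-bulb temperature and relative humidity."""
    t_c = f_to_c(t_f)
    gamma = math.log(rh_pct / 100.0) + A * t_c / (B + t_c)
    return c_to_f(B * gamma / (A - gamma))


def supply_air_f(set_point_f: float, coil_delta_f: float = 18.0) -> float:
    """Rough supply temperature if the unit drops air ~18 F below set point."""
    return set_point_f - coil_delta_f


if __name__ == "__main__":
    supply = supply_air_f(68.0)
    print(f"68 F set point -> ~{supply:.0f} F supply air")
    print(f"Saturation at {supply:.0f} F: ~{saturation_grains(supply):.0f} grains/lb")
    for rh, t in [(60, 65), (50, 70), (45, 75), (36, 80)]:
        dp = dew_point_f(t, rh)
        # Supply air temperature is used as a proxy for coil surface temperature;
        # real coil surfaces run colder, so condensation can start even sooner.
        flag = "condensation" if dp >= supply else "dry coil"
        print(f"{rh}% RH @ {t} F: dew point ~{dp:.1f} F -> {flag}")
```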

In other words, with 50° F supply air, the cooling coils would start removing water from the air in the form of condensation. On the mechanical side of the data center, we call this latent cooling, and it subtracts from the sensible cooling capacity of our cooling units. It is therefore conceivable that the lower set point could actually reduce the cooling capacity of the data center mechanical plant.

A related irony can result from fighting hot spots by adding extra cooling units. How to solve a cooling problem is not typically an IT decision, but it is noteworthy here because, as with reducing set points, the extra cooling units may produce the similarly ironic result of reduced cooling capacity and worse hot spots. A large over-capacity of supply air can short-cycle back to the cooling units without picking up any heat, producing return air below the set point. The units then cycle off their cooling coils and simply recirculate that return air, uncooled, back into the data center. As a result, rather than 55° F or 60° F air, the air those fans push into the data center may be 69° F or 70° F, and it can actually raise the temperature of the part of the data center experiencing the hot spots.
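The short-cycling mechanism can be illustrated with a toy model in Python. Everything here is a hypothetical simplification: return air is treated as a flow-weighted mix of bypassed supply air and IT exhaust at a fixed 85° F, the unit is assumed to run its coil only when return air meets or exceeds a 72° F set point, and the roughly 18° F coil drop is taken from the text. With modest bypass the coil stays on and supply air settles a little above 59° F; with heavy bypass the return air falls below set point, the coil cycles off, and supply air oscillates up toward 69° F to 70° F.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class CoolingUnit:
    set_point_f: float = 72.0   # return-air set point (hypothetical value)
    coil_delta_f: float = 18.0  # approximate drop across the coil when it is on

    def supply_temp(self, return_f: float) -> float:
        """Coil runs only when return air meets or exceeds set point; else fan-only."""
        if return_f >= self.set_point_f:
            return return_f - self.coil_delta_f
        return return_f  # coil cycled off: air is recirculated without cooling


def simulate(unit: CoolingUnit, bypass_fraction: float, it_exhaust_f: float,
             start_supply_f: float, steps: int) -> List[float]:
    """Toy loop: return air = bypassed supply air mixed with IT exhaust air."""
    supply = start_supply_f
    history: List[float] = []
    for _ in range(steps):
        return_f = bypass_fraction * supply + (1.0 - bypass_fraction) * it_exhaust_f
        supply = unit.supply_temp(return_f)
        history.append(supply)
    return history


if __name__ == "__main__":
    unit = CoolingUnit()
    for bypass in (0.3, 0.8):
        temps = simulate(unit, bypass, it_exhaust_f=85.0, start_supply_f=55.0, steps=12)
        print(f"bypass {bypass:.0%}: " + ", ".join(f"{t:.1f}" for t in temps))
```

Holding IT exhaust temperature fixed keeps the sketch simple; in a real room the exhaust temperature would respond to the inlet temperature as well.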

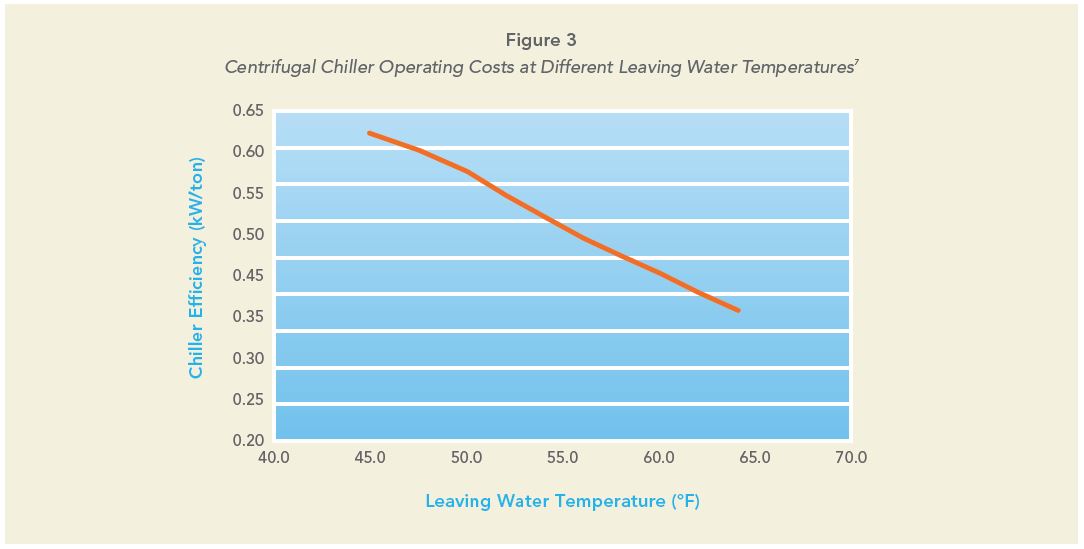

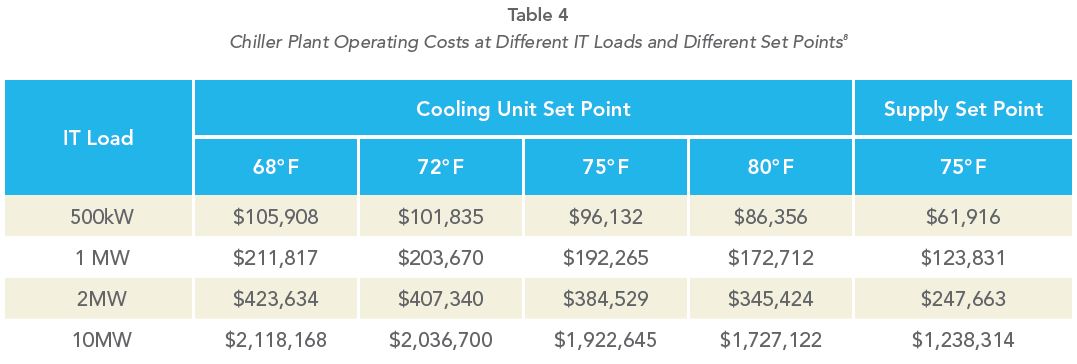

More importantly, arbitrarily established low set points drive up the cost of running the data center cooling plant. Figure 3 shows the kilowatt (kW) consumption of a highly efficient centrifugal chiller plant at different leaving water temperatures. Older chillers, or plants that have not been optimized, may not achieve this level of absolute efficiency, but the relative differences along the slope are instructive, and many data centers can reasonably expect a steeper savings line. The graph therefore offers a conservative and defensible estimate of chiller energy use, and these values were used to calculate the energy costs in Table 4.
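The Table 4 style of calculation can be sketched as simple arithmetic. This is a minimal sketch, assuming chiller tons are sized at 130% of IT load (the basis stated in the note on chiller tons), that the plant draws a constant kW per ton for every hour of the year, and that free cooling is ignored. The kW/ton figures and electricity rate in the usage example are hypothetical placeholders, not values read from Figure 3.

```python
KW_PER_TON = 3.517      # 1 ton of refrigeration = 12,000 BTU/h, about 3.517 kW
HOURS_PER_YEAR = 8760


def chiller_tons(it_load_kw: float, overhead_factor: float = 1.30) -> float:
    """Chiller tons sized at 130% of IT load (battery/electrical rooms plus headroom)."""
    return it_load_kw * overhead_factor / KW_PER_TON


def annual_chiller_cost(it_load_kw: float, chiller_kw_per_ton: float,
                        usd_per_kwh: float, hours: float = HOURS_PER_YEAR) -> float:
    """Annual chiller energy cost, assuming the given kW/ton applies every hour."""
    energy_kwh = chiller_tons(it_load_kw) * chiller_kw_per_ton * hours
    return energy_kwh * usd_per_kwh


if __name__ == "__main__":
    # Hypothetical inputs: substitute kW/ton values read from Figure 3 at the
    # leaving water temperatures that correspond to each set point.
    it_kw = 1000.0
    rate = 0.10
    low_lwt = annual_chiller_cost(it_kw, chiller_kw_per_ton=0.60, usd_per_kwh=rate)
    high_lwt = annual_chiller_cost(it_kw, chiller_kw_per_ton=0.45, usd_per_kwh=rate)
    print(f"Low leaving water temp:  ${low_lwt:,.0f}/yr")
    print(f"High leaving water temp: ${high_lwt:,.0f}/yr")
    print(f"Difference:              ${low_lwt - high_lwt:,.0f}/yr")
```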

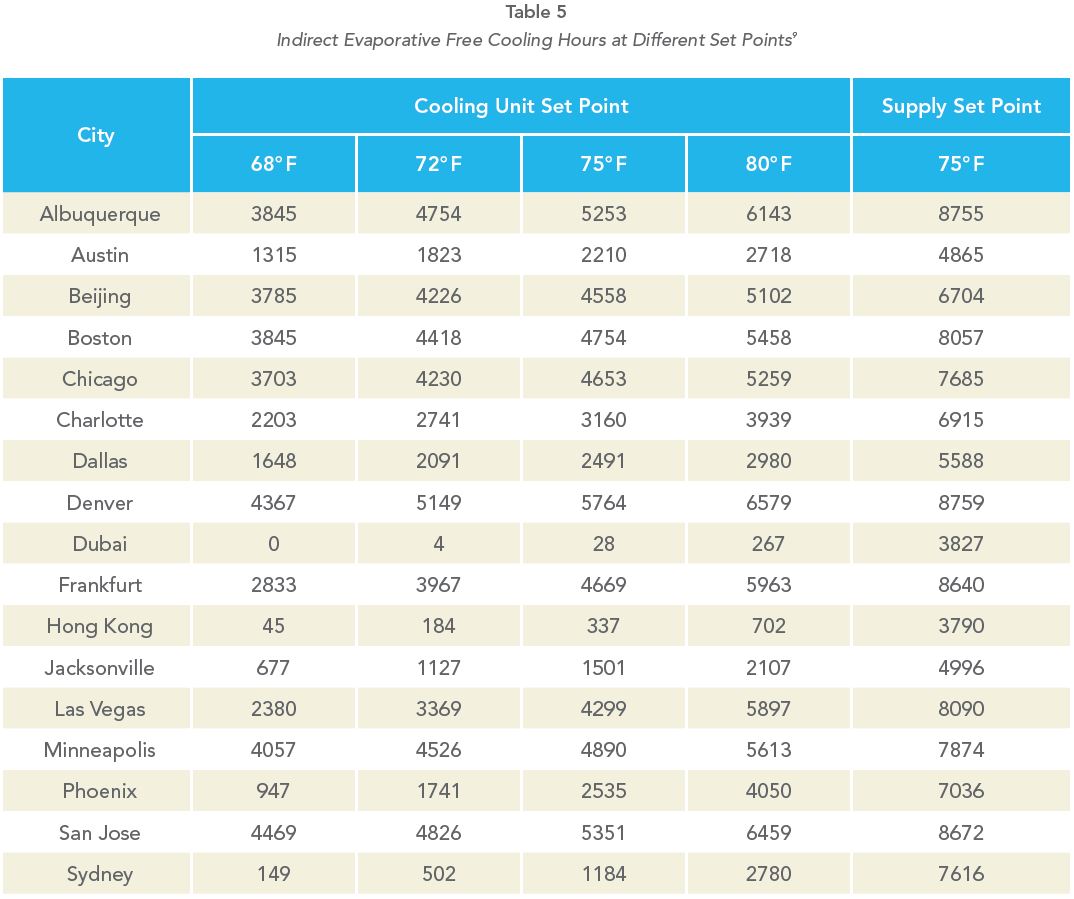

Table 5 clearly shows the free cooling benefits of good set point management. It also shows that in geographies such as Austin, TX; Dallas, TX; Dubai; Hong Kong; Jacksonville, FL; and Sydney, Australia, low set points would not provide enough free cooling hours to justify the expenditure for free cooling, whereas with good set point management that expenditure is easily justified by one- to two-year paybacks. IT management decisions regarding data center set points therefore affect not only the overall operating efficiency of the data center but the very architectural shape of the data center itself.
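Counting free cooling hours from hourly data and turning them into a simple payback can be sketched in Python. This assumes a hypothetical 5° F approach between outdoor temperature and the required supply temperature, a fixed chiller load avoided during each free cooling hour, and a hypothetical capital cost. The weather series is synthetic, generated from sine waves, and only stands in for real hourly bin data. The point is the mechanism: a higher set point raises the temperature threshold, so more hours of the year qualify.

```python
import math
from typing import Iterable, List


def free_cooling_hours(hourly_outdoor_f: Iterable[float], supply_target_f: float,
                       approach_f: float) -> int:
    """Count hours in which outdoor temperature, plus an assumed approach, meets the target."""
    limit = supply_target_f - approach_f
    return sum(1 for t in hourly_outdoor_f if t <= limit)


def simple_payback_years(capex_usd: float, free_hours: int,
                         chiller_kw_avoided: float, usd_per_kwh: float) -> float:
    """Capital cost divided by annual chiller energy cost avoided in free cooling hours."""
    annual_savings = free_hours * chiller_kw_avoided * usd_per_kwh
    if annual_savings <= 0:
        return math.inf
    return capex_usd / annual_savings


def synthetic_year() -> List[float]:
    """SYNTHETIC hourly temperatures (seasonal plus daily swing); not real weather data."""
    return [65.0 + 20.0 * math.sin(2 * math.pi * h / 8760)
            + 8.0 * math.sin(2 * math.pi * h / 24) for h in range(8760)]


if __name__ == "__main__":
    temps = synthetic_year()
    for set_point in (68.0, 80.0):
        target = set_point - 18.0  # supply air ~18 F below set point
        hours = free_cooling_hours(temps, target, approach_f=5.0)  # hypothetical approach
        years = simple_payback_years(250_000, hours, chiller_kw_avoided=150.0,
                                     usd_per_kwh=0.10)  # hypothetical cost inputs
        print(f"set point {set_point:.0f} F: {hours} free cooling hours, "
              f"payback {years:.1f} yr")
```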

7. ASHRAE Data Center Design and Operation Book #6: Best Practices for Datacom Facility Energy Efficiency, 2nd edition, 2009, p. 40

8. NB: Chiller tons based on 130% of IT load to account for battery room and electrical room cooling and capacity headroom.

9. NB: Free cooling hours based on hourly bin data for the noted cities from 2007 and 2008. Figures will likely vary with more current data or with a ten-year sample, but the relative differences between set points are adequate for preliminary planning purposes.



Ian Seaton

Data Center Consultant
