Data Center Connectivity: Fiber’s Edge Over Copper

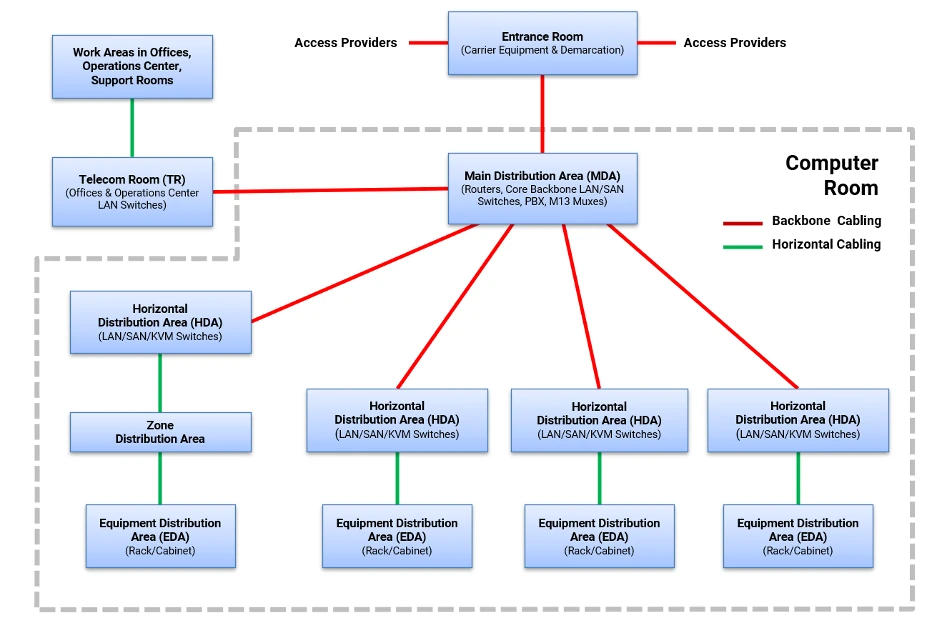

Optical fiber has long been the primary cabling medium for switch-to-switch connections in the data center, whether between Main Distribution Areas (MDAs) and Horizontal Distribution Areas (HDAs) linking core and aggregation devices; between HDAs and Equipment Distribution Areas (EDAs) linking aggregation switches and access switches; or between HDAs and EDAs linking interconnection switches and access switches. Copper, in comparison, has remained the primary media choice for in-cabinet switch-to-server connections at the EDA. But now the need for faster transmission speeds in highly virtualized server environments, combined with advancements in technology, is paving the way for fiber to displace copper in the EDA as well.

Copper Struggles to Keep Up at the Edge

10 Gigabit servers gained significant market share between 2010 and 2020, overtaking 1 Gigabit servers in market share by around 2015. For switch-to-server links in the EDA, 10 Gb/s transmission speeds allowed category 6A twisted-pair copper cabling to be used cost-effectively for distances up to 100 meters in middle-of-row (MoR) or end-of-row (EoR) deployments. High-speed interconnects such as twinax direct attach cables (DACs) also support 10 Gb/s in short-reach top-of-rack (ToR) deployments where switches in each cabinet connect directly to the servers in that cabinet. For switch-to-switch links, 40 and 100 Gb/s fiber applications became the standard to support the upstream requirements of these 10 Gb/s server uplinks. Now the ever-increasing amounts of data and demand for higher-bandwidth, low-latency transmission are starting to render these speeds inadequate for many data centers.

While category 8 twisted-pair copper cabling ratified in 2016 supports 30-meter 25 and 40 Gb/s applications in MoR/EoR deployments, higher cost and power consumption have prevented this technology from truly coming to fruition. Furthermore, 40 Gb/s applications are being phased out due to new four-level pulse amplitude modulation (PAM4) encoding technology that enables 25 Gb/s and 50 Gb/s per lane signaling rather than 10 Gb/s per lane. This has shifted the original migration path of 10-40-100 Gb/s to a more efficient 25-50-100 Gb/s migration.
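To see why PAM4 raises the per-lane rate, it may help to compare it with two-level NRZ signaling: PAM4 maps two bits onto one of four amplitude levels, so the same number of symbols carries twice as many bits. The sketch below is illustrative only. The function names are hypothetical, and the Gray-coded level mapping is an assumption chosen for the example rather than something stated in this article.

```python
# Illustrative sketch: NRZ carries 1 bit per symbol, PAM4 carries 2.
# The Gray-coded mapping below is an assumption made for this example.
PAM4_LEVELS = {(0, 0): 0, (0, 1): 1, (1, 1): 2, (1, 0): 3}


def nrz_encode(bits):
    """Map each bit to one of two levels (one symbol per bit)."""
    return list(bits)


def pam4_encode(bits):
    """Map each pair of bits to one of four levels (one symbol per two bits)."""
    if len(bits) % 2:
        raise ValueError("PAM4 needs an even number of bits")
    return [PAM4_LEVELS[(bits[i], bits[i + 1])] for i in range(0, len(bits), 2)]


bits = [0, 0, 0, 1, 1, 1, 1, 0]
nrz_symbols = nrz_encode(bits)
pam4_symbols = pam4_encode(bits)

# Same payload, half as many symbols on the wire with PAM4.
print(len(nrz_symbols), len(pam4_symbols))
```

At a fixed symbol rate, halving the symbol count per payload is what doubles the bit rate carried by each lane.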

High-speed DACs have evolved to support these new server speeds in short-length, point-to-point ToR deployments, with SFP28 DACs supporting 25 Gb/s to 5 meters, SFP56 DACs supporting 50 Gb/s to 3 meters, and QSFP28 DACs supporting 100 Gb/s to 5 meters. However, their limited 3 to 5 meter length makes them unsuitable for MoR or EoR deployments. Short-reach ToR deployments also limit switch-to-server connections to servers within a single cabinet, which is not ideal for highly virtualized distributed environments where multiple servers located in separate cabinets need to be connected to the same switch for efficient, low-latency server-to-server communication.
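The reach limits above can be expressed as a simple lookup, which makes it easy to see why a DAC works inside a cabinet but not across a row. This is a minimal sketch: the reach figures are the ones quoted in the text, while the table layout, the `dac_options` helper, and the 15 meter run are hypothetical.

```python
# Reach figures are the ones quoted in the text above; the structure and
# names (DAC_REACH_M, dac_options) are hypothetical.
DAC_REACH_M = {
    "SFP28": {"speed_gbps": 25, "reach_m": 5},
    "SFP56": {"speed_gbps": 50, "reach_m": 3},
    "QSFP28": {"speed_gbps": 100, "reach_m": 5},
}


def dac_options(speed_gbps, link_length_m):
    """Return the DAC types from the table that meet both speed and length."""
    return [
        name
        for name, spec in DAC_REACH_M.items()
        if spec["speed_gbps"] == speed_gbps and spec["reach_m"] >= link_length_m
    ]


print(dac_options(25, 2))   # in-cabinet ToR link
print(dac_options(25, 15))  # hypothetical 15 m MoR/EoR run
```

An empty result for the longer run is the practical meaning of the distance limitation: no DAC in the table can serve that link.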

With the distance limitations of DACs and twisted-pair copper cabling essentially tapping out at 10 Gb/s, optical fiber is the most viable medium for high-speed, flexible switch-to-server links for MoR and EoR applications. Since fiber transceivers have historically carried a much higher price tag compared to copper, the idea of bringing fiber into the horizontal to connect hundreds or thousands of servers may seem unfeasible from a cost perspective. However, PAM4 encoding combined with advancements in fiber transceiver technology is supporting lower-cost fiber deployments than ever before.

A Clear Winner in the Data Center

While optical fiber cable technology itself hasn’t really changed much, the innovation happening at the transceiver means that data center managers now have more cost-effective fiber applications to choose from for supporting 25, 50 and 100 Gb/s in switch-to-server links and 200 and 400 Gb/s in switch-to-switch links.

Based on previous non-return-to-zero (NRZ) encoding technology, 40 Gb/s over multimode (i.e., 40GBASE-SR4) used 4 fiber pairs and Base 8 MTP connectivity to transmit and receive at 10 Gb/s per pair up to 100 meters. But with new PAM4 encoding schemes, 50 Gb/s (i.e., 50GBASE-SR) can be transmitted over just one multimode fiber pair using duplex LC connectivity up to 100 meters. That’s more speed supported via 75% less fiber! In fact, some studies show that a 50 Gb/s duplex multimode fiber deployment now has the potential to be less expensive than a copper deployment using short-reach DACs—and without the distance limitation.
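The "75% less fiber" figure follows directly from the fiber counts in the paragraph above. A short worked calculation, with a hypothetical helper name, might look like this:

```python
# Fiber counts and speeds are taken from the paragraph above.
def fibers_for_pairs(pairs):
    """Each pair is one transmit fiber plus one receive fiber."""
    return pairs * 2


sr4_fibers = fibers_for_pairs(4)  # 40GBASE-SR4: 4 pairs at 10 Gb/s each
sr_fibers = fibers_for_pairs(1)   # 50GBASE-SR: 1 pair at 50 Gb/s

reduction = 1 - sr_fibers / sr4_fibers
print(f"{sr4_fibers} fibers -> {sr_fibers} fibers ({reduction:.0%} less)")
print(f"Gb/s per fiber pair: {40 / 4:g} vs {50 / 1:g}")
```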

PAM4 encoding also means that 200 Gb/s (i.e., 200GBASE-SR4) can now be supported via 4 multimode fiber pairs using 8-fiber MTP connectivity and 400 Gb/s (i.e., 400GBASE-SR8) can be supported via 8 multimode fiber pairs using 16-fiber MTP connectivity. And it’s not just the encoding scheme that has evolved to make optical fiber the clear winner. Short wavelength division multiplexing (SWDM) technology, which sends 50 Gb/s over each of two different wavelengths on a single multimode fiber (i.e., 100 Gb/s per fiber), now means that 400 Gb/s can be supported over just 4 fiber pairs (400GBASE-SR4.2). With a more efficient migration scheme, 400 Gb/s has broad market potential as it enables a single 400 Gb/s switch port to connect up to eight 50 Gb/s servers.
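The applications named above all reduce to the same arithmetic: fiber pairs, times wavelengths per fiber, times the rate per wavelength. The sketch below tabulates that, assuming 50 Gb/s per wavelength throughout as the paragraph implies. The `FiberApplication` class and its field names are hypothetical, introduced only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FiberApplication:
    """Hypothetical record for one multimode application named in the text."""
    name: str
    fiber_pairs: int
    wavelengths_per_fiber: int
    gbps_per_wavelength: int

    @property
    def total_gbps(self):
        return self.fiber_pairs * self.wavelengths_per_fiber * self.gbps_per_wavelength


APPLICATIONS = [
    FiberApplication("50GBASE-SR", 1, 1, 50),
    FiberApplication("200GBASE-SR4", 4, 1, 50),
    FiberApplication("400GBASE-SR8", 8, 1, 50),
    FiberApplication("400GBASE-SR4.2", 4, 2, 50),  # two wavelengths per fiber
]

for app in APPLICATIONS:
    print(f"{app.name}: {app.fiber_pairs} pairs -> {app.total_gbps} Gb/s")

# One 400 Gb/s switch port split into 50 Gb/s server links.
servers_per_port = 400 // 50
print(servers_per_port)
```

Comparing the last two entries shows the effect of the second wavelength: the same 400 Gb/s with half the fiber pairs.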

While singlemode fiber was once considered primarily a service provider technology for long-haul links, its greater distance and bandwidth capabilities have made it the preference for larger cloud and hyperscale data centers in recent years. Now advancements in singlemode transceiver technology are also making singlemode fiber a viable option for the data center. Using lower cost, low-power singlemode transceivers, new short-reach singlemode applications support 100 Gb/s over a single pair and 200 Gb/s over four pairs up to 500 meters without the expense associated with long-haul, high-power singlemode transceivers.

What Does This All Mean?

With optical fiber the clear winner moving forward for both backbone switch-to-switch and horizontal switch-to-server links, MTP connectivity will continue to be widely deployed. Not only do MTP solutions support fast plug-and-play deployment, but they also effectively support the new 25-50-100 Gb/s migration path for switch-to-server links, as well as 200 and 400 Gb/s switch-to-switch links. Because a single 400 Gb/s switch port can connect to up to eight 50 Gb/s ports, MTP-to-LC cassettes and MTP-to-LC hybrid assemblies are key components. Data center managers will also have more fiber to manage in pathways and at patching areas, calling for innovative data center solutions that effectively support high-density connectivity and enable superior cable management.
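As a rough planning aid, the one-port-to-eight-servers breakout can be turned into a port and patching estimate. This is a sketch under stated assumptions: every server takes one 50 Gb/s duplex LC link, every switch port is a 400 Gb/s port broken out eight ways, and the `plan_breakout` helper and the 42-server row are hypothetical.

```python
import math

SERVERS_PER_400G_PORT = 8  # from the text: one 400 Gb/s port to eight 50 Gb/s ports


def plan_breakout(server_count):
    """Estimate 400 Gb/s switch ports and duplex LC links for a server count."""
    switch_ports = math.ceil(server_count / SERVERS_PER_400G_PORT)
    return {
        "switch_ports_400g": switch_ports,
        "duplex_lc_links": server_count,  # assumes one fiber pair per server
        "unused_50g_lanes": switch_ports * SERVERS_PER_400G_PORT - server_count,
    }


print(plan_breakout(42))  # hypothetical row of 42 servers
```

The duplex LC count is also a quick indicator of how much patching density the cassettes and cable management in the row need to handle.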

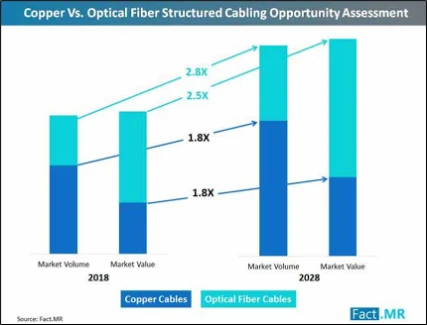

While optical fiber is clearly the winner for the data center, and its market volume is expected to increase at a rate of more than one and a half times that of copper between 2018 and 2028, that doesn’t mean copper cabling is going away any time soon. First of all, there are still plenty of 10 Gb/s servers that will remain in place over the next decade and many data centers will continue to use a ToR scenario with short-reach copper DACs. Copper will also still be deployed for lower-speed monitoring, centralized KVM and management across the data center.

In addition, twisted-pair copper cabling’s ability to cost-effectively support up to 10 Gb/s speeds and deliver power to devices using power over Ethernet (PoE) makes it vital to connecting and powering a wide range of devices both inside and outside of the data center—everything from Wi-Fi access points, surveillance cameras, and PoE lighting, to access control and audiovisual systems. Considering that the smart building market is expected to grow from $43.6 billion in 2018 to $160 billion by 2026, it’s safe to say that the future for copper is just as set in stone as that of fiber. With the emergence of 5G mobile technology, wireless communications will also continue to increase as a means to connect people and devices and bridge the digital divide.

But don’t forget that behind every smart building and every 5G cell tower are fiber backbones and data centers that are required to transmit, process and store information from the more than 46 billion and growing connected devices around the world. In fact, the Wireless Broadband Association predicts that it will take a total of nearly 1.4 million miles of fiber to provide full 5G service in the top 25 metropolitan areas alone. It’s no wonder that Facebook just completed 77 miles of fiber infrastructure to connect its data centers, Verizon is now deploying about 1,000 miles of fiber every month and AT&T just announced that it plans to bring fiber to 3 million locations across 90 metro areas by the end of this year.

Siemon

Backed by industry leadership, renowned technical service, a strong data center partner ecosystem and excellent supply chain logistics, Siemon doesn’t just help you design and deploy data center infrastructure for today. Our experienced teams work with you to design, implement and deliver a high-quality infrastructure approach, backed by a comprehensive service offering, that prepares you for the challenges ahead. Learn more about how Siemon can help you maintain business continuity in 2021 and beyond.